Monthly Archives: March 2014

An Interesting Truth

One day, in the Louisiana National Guard…

Boudreaux, the smoothest-talking Cajun in the Louisiana National Guard, got called up to active duty.

Boudreaux’s first assignment was in a military induction center. Because he was a good talker, they assigned him the duty of advising new recruits about government benefits, especially the GI insurance to which they were entitled.

The officer in charge soon noticed that Boudreaux was getting a 99% sign-up rate for the more expensive supplemental form of GI insurance. This was remarkable, because it cost these low-income recruits $30.00 per month for the higher coverage, compared to what the government was already providing at no charge. The officer decided he’d sit in the back of the room at the next briefing and observe Boudreaux’s sales pitch.

Boudreaux stood up before the latest group of inductees and said, “If you has da normal GI insurans an’ you goes to Afghanistan an’ gets youself killed, da govment’ pays you benefishery $20,000. If you takes out da suppmental insurans, which cost you only t’irty dollars a munt, den da governmen’ gots at pay you benefishery $400,000!

“Now,” Boudreaux concluded, “which bunch you tink dey gonna send at Afghanistan first?”

Top Attractions In Each of the 50 States

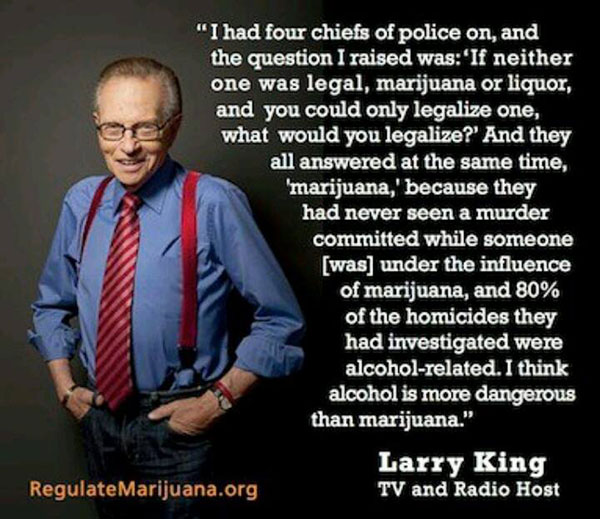

If Company Logos Were Really Honest

Soda Flavors That Have To Make You Wonder

The Story of Aspirin

The effects of aspirin-like substances have been known since the ancient Romans recorded the use of the willow bark as a fever fighter. The leaves and bark of the willow tree contain a substance called salicin, a naturally occurring compound similar to acetylsalicylic acid, the chemical name for aspirin.

Even as far back as 400 B.C., Hippocrates recommended a tea made from willow leaves. It wasn’t until the 1800s that scientists discovered what was in the willow tree that relieved pain and reduced fever. The substance was named salicylic acid. But when people suffering from pain took the salicylic acid, it caused severe stomach and mouth irritation.

In 1853, a thirty-seven-year-old French chemist named Charles Gerhardt mixed another chemical with the acid and produced good results, but the procedure was difficult and took a lot of time. Gerhardt decided the new compound wasn’t practical, so he set it aside.

Forty-four years later, in 1897, a German chemist, Felix Hoffmann, was searching for something to relieve his father’s arthritis. He studied Gerhardt’s experiments and “rediscovered” acetylsalicylic acid, or aspirin, as we now know it.

In 1948, Dr. Lawrence Craven, a California general practitioner, noticed that the 400 men to whom he had prescribed aspirin hadn’t suffered any heart attacks. He regularly recommended to all patients and colleagues that “an aspirin a day” could dramatically reduce the risk of heart attack.

In 1971 John Vane began his work on aspirin. Over a weekend he conceived the notion that the mysterious drug might work by inhibiting the generation of prostaglandins. He turned again to his bioassay system for the answer and within a few days he had convinced himself and his colleagues that this indeed was the missing mechanism of action.

Aspirin reduces the production of hormone-like substances called prostaglandins, which are produced in tissues throughout the body. Prostaglandins have many functions. They are part of the chemical messenger systems involved in feeling pain, fever, the redness and swelling that can accompany injuries, and even in contracting certain muscles, for example, the uterus. Since aspirin lowers the amount of prostaglandins, it can help alleviate conditions like pain, fever and the discomfort of menstrual cramps. Aspirin also reduces production of substances involved in the early stages of our body’s blood clotting mechanism. This is why doctors may prescribe aspirin, as part of a regimen including diet and exercise, for appropriate individuals with cardiovascular disease.

Today over 70 million pounds of aspirin are produced annually all over the world, making it the world’s most widely used drug. Americans alone consume over 50 million aspirin tablets every day. That’s over 15 billion tablets a year.

Foods That Aren’t What They’re Thought To Be

Pringles

These weirdly homogeneous chips in a can are far from pieces of fried potato. While they do technically count as potato chips in the U.S., the company successfully convinced the U.K. government that Pringles aren’t potato chips so that the product wouldn’t be taxed as a luxury item. Pringles are made from a mix of corn, rice, wheat, and potato flakes that are mushed together and then put into a machine to give them their unique shape. Pringles are actually less than 50 percent potato.

Aunt Jemima “maple syrup”

Aunt Jemima’s maple syrup is actually for the most part corn syrup. The first three ingredients are corn syrup, high fructose corn syrup, and water. Nothing maple is even in the stuff, unless that’s covered by “natural and artificial flavors.” Calling the sugary condiment what it is — Aunt Jemima’s corn syrup — doesn’t sound quite as appetizing first thing in the morning. Aunt Jemima can’t even call it “maple” syrup, and instead just refers to it as “syrup,” hoping that consumers think maple rather than corn.

Kraft 100 Percent Grated Parmesan Cheese

Here’s a cheese that’s not actually cheese, from our friends at Kraft. This stuff is so far from real Parmesan cheese that it can’t legally be labeled as such in Europe. Kraft lists the product’s only ingredient as being “Parmesan cheese” but then goes on to say that “Parmesan cheese” is made of milk, salt, and a whole bunch of additives. Cellulose (wood shavings) is added to keep the product’s powdery consistency, along with potassium sorbate and cheese cultures. As Forbes food writer Larry Olmsted points out in an in-depth article about Parmesan cheese, Kraft’s version is 100 percent grated but definitely not 100 percent cheese.

Jell-O

Jell-O isn’t vegan. It’s not even vegetarian. Jell-O is made of gelatin, which is itself made of the collagen found in animal protein. Sources of that animal protein include various combinations of bones, hooves, and skin from pigs, cows, and fish. While Jell-O has a strange cult fixation among the Mormon community, people who practice religions that prohibit the consumption of certain types of meat have to skip it entirely, as Jell-O packaging doesn’t specify what type of animal was used in the production of the gelatin. Some potential good news for Jell-O-loving vegetarians? There have been recent experiments with making gelatin from human DNA fragments.

Sunny Delight

Think Sunny D is just super sugary orange juice? Well, you’re right about the sugar, wrong about the juice. Sunny D and anything else labeled “juice drink” is not juice but high fructose corn syrup. The small amount of juice that’s in Sunny Delight comes under the “less than 2 percent” section and is a combination of orange, tangerine, apple, lime, grapefruit, and pear juice. Ninety-eight percent of the drink is just corn syrup and water. The drink’s vitamin C content is added artificially through ascorbic acid, and it’s rife with preservatives and artificial coloring. If you want to drink real fruit juice, choose an option labeled “100 percent juice.”

Oreos

Oreos are vegan. That’s right: Milk’s favorite cookie contains no dairy or eggs. The ingredient label starts with sugar and unbleached enriched flour, then goes on to list a plethora of unpronounceable chemical additives. Chocolate comes in last on the cookie’s long list of ingredients. Among other things, the cookies contain wheat and soy, but no dairy. So what is that creamy center made of, if not cream? The Oreo nutritional facts don’t make that very clear.

Cool Whip

Here’s another dairy-like substance that isn’t actually a dairy product. Skim milk comes in fourth on the popular whipped topping’s list of ingredients, behind hydrogenated vegetable oil, high fructose corn syrup, and corn syrup. Light cream only makes an appearance in the “less than 2 percent” section of the ingredients list. Cool Whip is not whipped cream. For a better whipped cream option, reach for Reddi-wip instead, as real cream is the first ingredient on its label.

Velveeta

There’s been a lot of talk about a possible Velveeta shortage. The product melts smoothly to create a perfect cheesy base for queso or as a topping for nachos. But did you know that Velveeta isn’t actually cheese? It’s a cheese product. According to the FDA’s rules, Velveeta doesn’t qualify as dairy. Perhaps in light of the devastating shortage, you could reach for a block of real cheddar when making your queso and rest easy knowing that what you’re eating is actually food.

Early Evidence of Cannibalism

Cannibalism is a practice that we all hope to avoid, even if we can sometimes understand why people are forced to do it to survive. However, researchers have found that members of the earliest common ancestor species of both modern humans and Neanderthals were cannibals simply because they wanted to be. They had plenty of access to other meat, but still regularly ate the flesh of rival families. Cannibalism was also practiced by Neanderthals, but more often it was out of necessity.

There are times when we can understand why people had to do it; if it’s a matter of survival, that’s at least a rational explanation. But researchers have found that our abhorrence of eating human flesh wasn’t shared by our earliest ancestors.

Homo antecessor is an early ancestor of modern humans, and the last fork in the tree before modern humans split from Neanderthals. They lived in Europe about one million years ago, and other members of the Homo antecessor group were a regular part of their diet.

This conclusion came from the excavation of a cave in Spain called “Gran Dolina,” which provided a largely untouched look at the life of our early ancestors. Showing that garbage can be very telling about the lifestyle of a group of people, the Homo antecessors’ garbage yielded not only broken tools, but the bones of animals and other Homo antecessor individuals that had been butchered in much the same way as the animals had been.

The bones of the other early humans didn’t just show signs of death from old age, accidents, or battle. Long bones like arms and legs had been broken open so the marrow could be removed, and skulls had been smashed, presumably to get at the nutrient-rich brain. Marks on the bones matched those of the primitive tools and the marks on the animal bones.

The presence of these animal bones alongside the Homo antecessor remains is important for a couple of different reasons. It not only allowed researchers to compare the damage done to both and determine that they had been processed the same way, but it also suggests that Homo antecessor weren’t eating their neighbors just because they were starving. The regular, practiced signs of butchery and the number of bones found at the site suggest that Homo antecessor ate their own kind simply because they were a good source of meat, like any other animal.

And it was a practice that was carried on by the Homo antecessors’ Neanderthal descendants as well. At another Spanish dig site, this one in the El Sidron caves, researchers from the Institute of Evolutionary Biology in Barcelona found a cache of Neanderthal bones that had been butchered and eaten. Ranging in age from young children to adults, the 50,000-year-old bones revealed that skulls had similarly been broken open, marrow extracted, and even the jaws broken open so the hunters could get to the soft parts of the mouth and tongue. The bones were unburnt, and the idea that the flesh was eaten raw led researchers to believe that the hunters had been starving.

That idea was further supported by examining the bones of the Neanderthal family that performed the cannibalism, along with other Neanderthal bones. The bones show signs of periods of starvation and malnutrition, suggesting that, unlike their ancestors, these progressives only ate their fellow Neanderthals when it was absolutely necessary for their own survival.

UN Report on Global Warming

The UN has issued yet another firm and fact-filled statement dealing with global warming: extreme weather events in 2013 can largely be traced to global warming caused by human actions, according to the newest Status of the Climate Report from the United Nations.

Michel Jarraud is secretary-general of the World Meteorological Organization. His group just released a new annual report on global climatic conditions. Extreme weather events were experienced in the United States, Europe, Asia and the Pacific last year.

According to the new assessment, 2013 was the sixth-warmest year on record. The 13 complete years since 2000 have made up all but one of the 14 hottest on record, according to the U.N. report. Australia recorded a new average high temperature, as did parts of Africa and central Asia.

It will be interesting to see, in the run-up to and the wake of the release of the ultimate climate disaster movie, how much wingnuts will try to debunk this study.

After all, with the release of the feature film, Noah, tens of millions of Christians will be watching a movie about a sudden, violent flood that wipes out all life on earth. It doesn’t really matter if God directs it or not, does it? It’s going to scare people.

See, whether or not you believe in God, it’s OK to believe that mucking up our home is going to have a backlash. God might step in, if you believe in the myth of God, but then he’s shown in the past he might not if he’s angry enough at us.

And if you don’t believe in God, but in science, well then it’s absolutely crystal clear, and will happen whether you agree with it or not. Humility should be the watchword of the day.

And the telling phrase in the report is this: A study of higher temperatures in Australia used nine separate computer models to determine whether the phenomenon was due to man-made causes or was a natural occurrence. The models revealed that the record-high temperatures last year would have been nearly impossible without trapped greenhouse gases.